I am a PhD student in the Autonomous Learning Robots (ALR) lab at the Karlsruhe Institute of Technology (KIT), Germany. My research focuses on robotics and machine learning, and I am supervised by Gerhard Neumann and Rudolf Lioutikov.

Email / Google Scholar / GitHub

My primary research goal is to build intelligent embodied agents that assist people in their everyday lives and communicate intuitively. One of the key challenges to be solved towards this goal is learning from multimodal, uncurated human demonstrations without rewards. Therefore, I am working on novel methods that exploit multimodality and learn versatile behaviour. Representative papers are highlighted.

Xiaogang Jia, Denis Blessing, Xinkai Jiang, Moritz Reuss, Atalay Donat, Rudolf Lioutikov, Gerhard Neumann

ICLR 2024

OpenReview

We introduce D3IL, a novel set of simulation benchmark environments and datasets tailored for imitation learning. D3IL is designed to challenge and evaluate AI models on their ability to learn and replicate diverse, multi-modal human behaviors. Our environments encompass multiple sub-tasks and object manipulations, providing a rich diversity in behavioral data, a feature often lacking in other datasets. We also introduce practical metrics to effectively quantify a model's capacity to capture and reproduce this diversity. Extensive evaluations of state-of-the-art methods on D3IL offer insightful benchmarks, guiding the development of future imitation learning algorithms capable of generalizing complex human behaviors.

Moritz Reuss, Maximilian Li, Xiaogang Jia, Rudolf Lioutikov

Best Paper Award @ Workshop on Learning from Diverse, Offline Data (L-DOD) @ ICRA 2023, Robotics: Science and Systems (RSS), 2023

project page / Code / arXiv

We present a novel policy representation, called BESO, for goal-conditioned imitation learning using score-based diffusion models. BESO is able to effectively learn goal-directed, multi-modal behavior from uncurated, reward-free offline data. On several challenging benchmarks, our method outperforms current policy representations by a wide margin. BESO can also be used as a standard policy for imitation learning and achieves state-of-the-art performance with only 3 denoising steps.

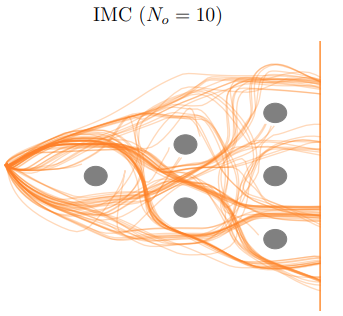

Denis Blessing, Onur Celik, Xiaogang Jia, Moritz Reuss, Maximilian Xiling, Rudolf Lioutikov, Gerhard Neumann

Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS), 2023

arXiv

We introduce the Information Maximizing Curriculum method to address mode-averaging in imitation learning by enabling the model to specialize in representable data. This approach is enhanced by a mixture of experts (MoE) policy, each focusing on different data subsets, and employs a unique maximum entropy-based objective for full dataset coverage.

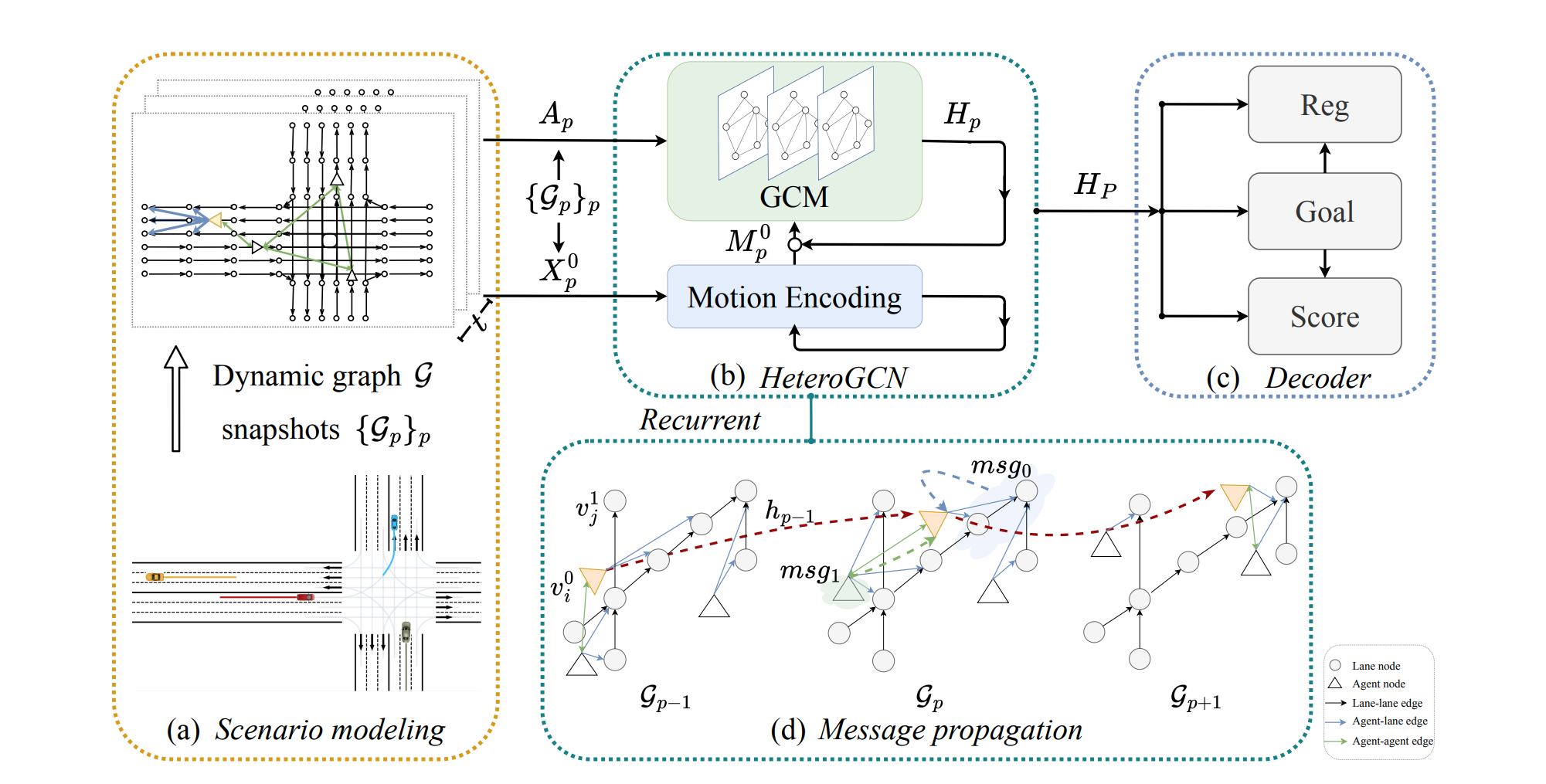

Xing Gao, Xiaogang Jia, Yikang Li, Hongkai Xiong

IEEE Robotics and Automation Letters, 2023

arXiv

In this paper, we resort to dynamic heterogeneous graphs to model the scenario. Various scenario components including vehicles (agents) and lanes, multi-type interactions, and their changes over time are jointly encoded. Furthermore, we design a novel heterogeneous graph convolutional recurrent network, aggregating diverse interaction information and capturing their evolution, to learn to exploit intrinsic spatio-temporal dependencies in dynamic graphs and obtain effective representations of dynamic scenarios. Finally, with a motion forecasting decoder, our model predicts realistic and multi-modal future trajectories of agents and outperforms state-of-the-art published works on several motion forecasting benchmarks.

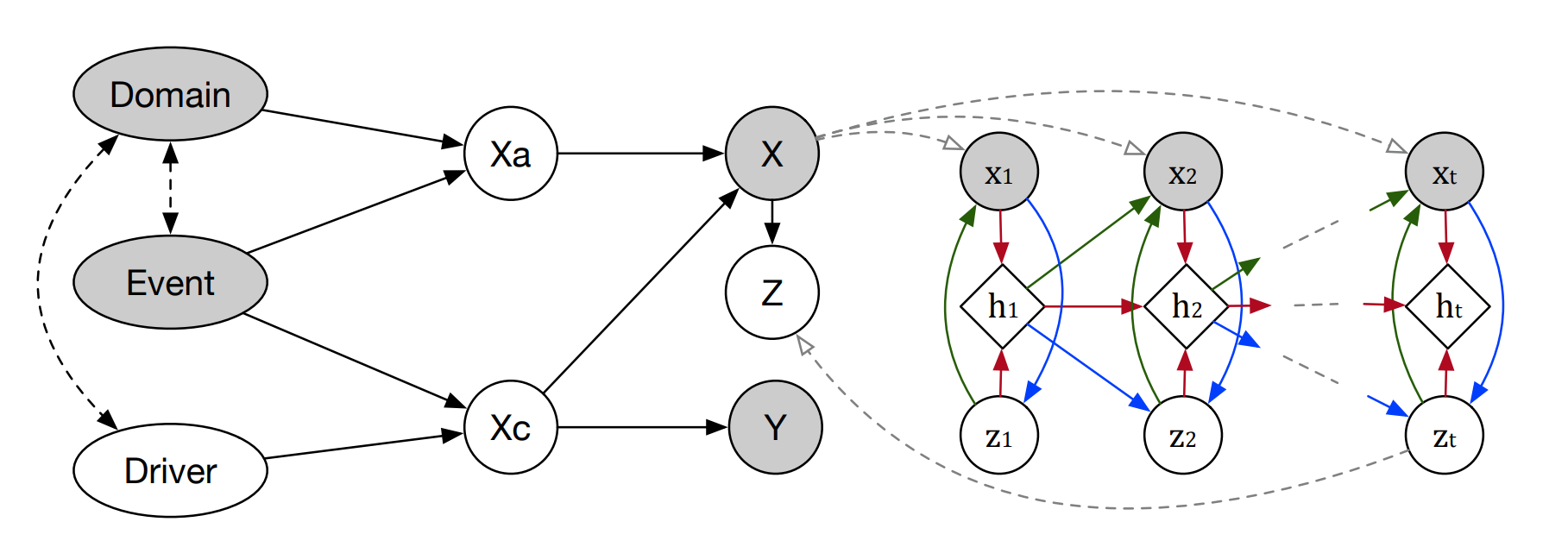

Yeping Hu, Xiaogang Jia, Masayoshi Tomizuka, Wei Zhan

International Conference on Robotics and Automation (ICRA), 2022

arXiv

We construct a structural causal model for vehicle intention prediction tasks to learn an invariant representation of input driving data for domain generalization. We further integrate a recurrent latent variable model into our structural causal model to better capture temporal latent dependencies from time-series input data. The effectiveness of our approach is evaluated via real-world driving data.

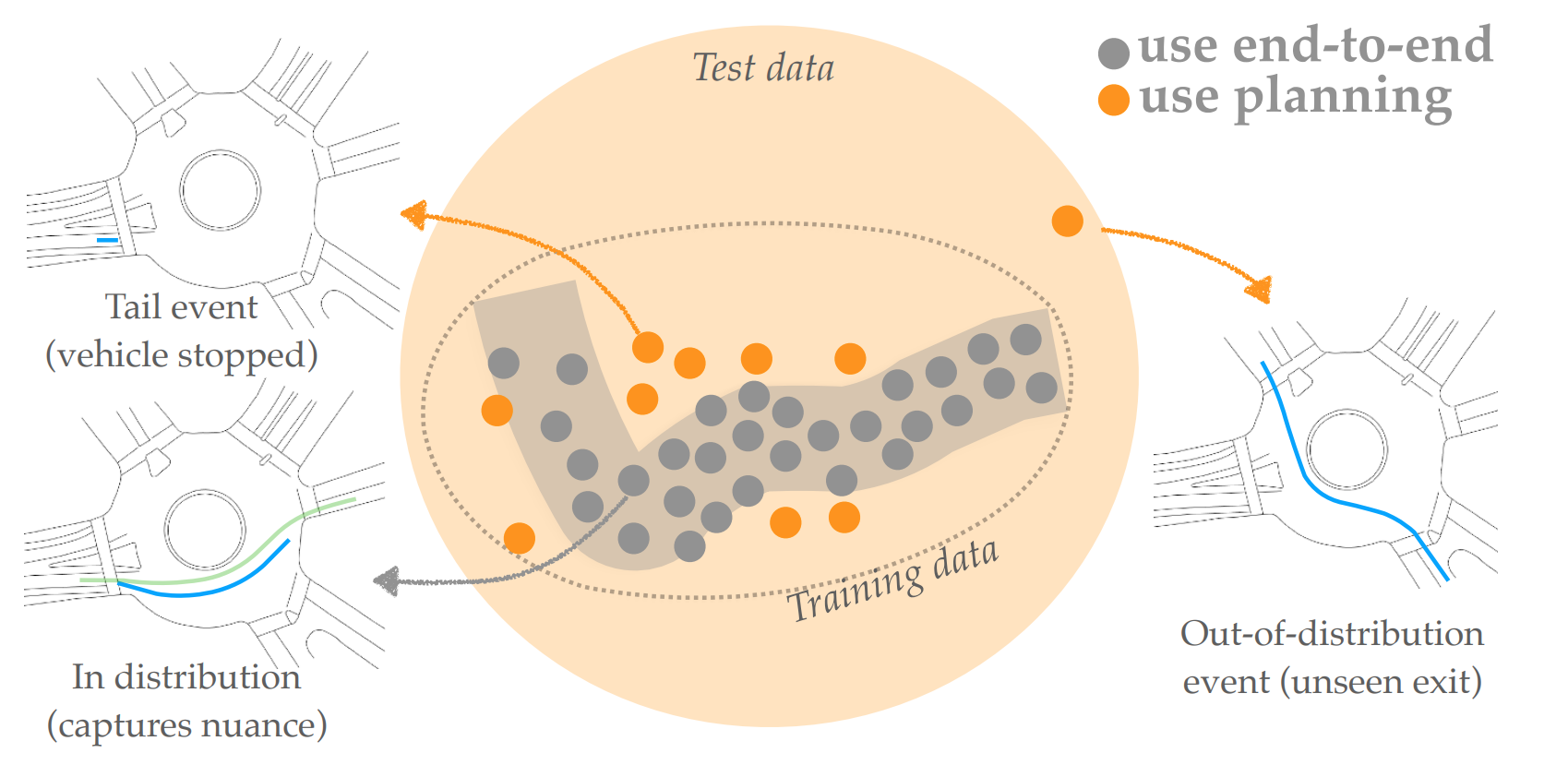

Liting Sun, Xiaogang Jia, Anca D Dragan

Robotics: Science and Systems (RSS), 2021

arXiv

In this work, we analyze one family of approaches that strive to get the best of both worlds: use the end-to-end predictor on common cases, but do not rely on it for tail events or out-of-distribution inputs, and switch to the planning-based predictor there. We contribute an analysis of different approaches for detecting when to make this switch, using an autonomous driving domain. We find that promising approaches based on ensembling or generative modeling of the training distribution might not be reliable, but that there are very simple methods that can perform surprisingly well, including training a classifier to pick up on tell-tale issues in predicted trajectories.

The website is based on the code from source code!